- Default Isn’t Always Private: Although Meta AI chats are private by default, many still surface publicly because of unclear sharing controls and a confusing multi-step opt-in process.

- Sensitive Data at Risk: Users have accidentally exposed medical details, legal disputes, personal confessions, and even tax-evasion plans in a globally accessible feed.

- Identity Linkage: Chats made while logged into Facebook, Instagram, or WhatsApp can be linked back to user profiles, compounding privacy and security risks.

- Inadequate User Guidance: Meta’s in-app alerts and FAQ links fail to clearly inform users when a conversation will become public or how it may be used for AI training.

- Regulatory Gaps: The incident underscores the need for stronger AI privacy standards, modeled on existing data protection laws, that mandate private defaults, explicit consent, and clear UX safeguards.

With users unwittingly broadcasting medical, legal, and personal conversations to a global audience, Meta AI’s Discover feed raises urgent privacy and UX questions.

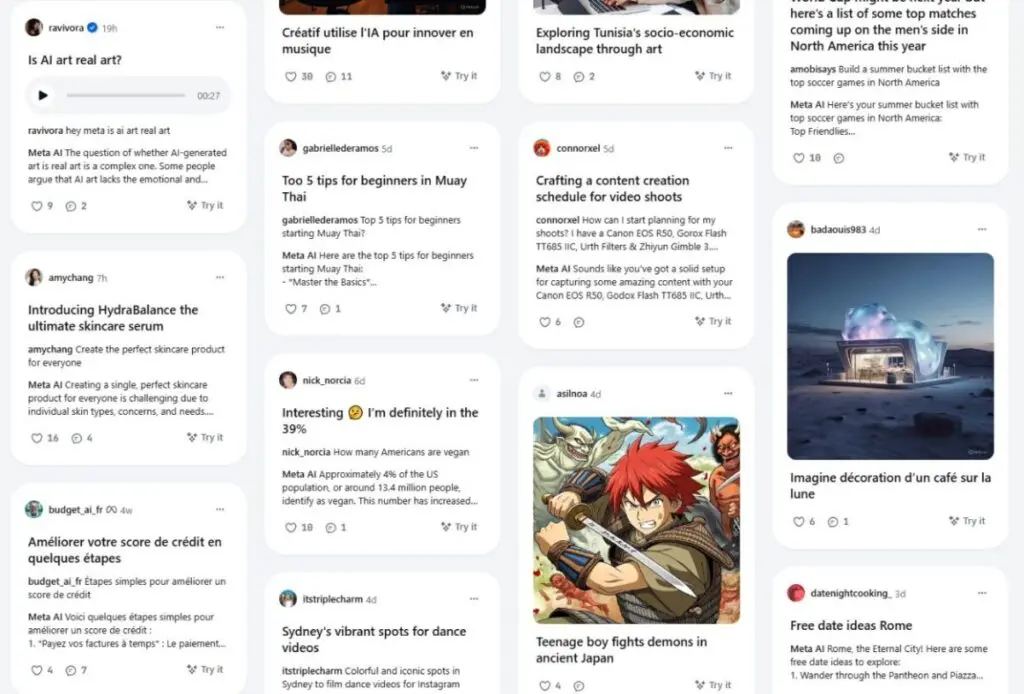

Meta AI launched with the promise of a conversational assistant embedded across Facebook, Instagram, WhatsApp, and its standalone app. Yet thousands of users have discovered that pressing the “Share” button in an AI chat doesn’t just send a link to friends: it posts intimate exchanges to a public “Discover” feed.

From tax-evasion tips to medical concerns and job-arbitration strategies, deeply personal prompts are now visible to anyone scrolling the feed, often without the original poster realizing it. This article unpacks the design flaws, real-world impact, and privacy implications of Meta’s public chat stream—and offers a blueprint for users and regulators to restore trust.

Design Flaws & User Confusion

Meta AI’s sharing workflow is deceptively simple: after typing a prompt, the user taps a Share icon, previews the post, and then publishes. Yet nowhere does the interface clearly state that this action broadcasts the conversation globally. Privacy experts warn that this ambiguous design leads to inadvertent disclosures.

- Opaque “Share” Labeling: In most apps, “Share” means sending content to a friend or another app, so the label implies private sharing rather than publication to a public feed.

- Multi-Step Confirmation: Even after previewing the post, no in-app warning clarifies that the Discover feed is visible to all users, logged out or in.

- Conflated Defaults: Meta AI chats are private by default, but users who stay logged into any Meta service on the same device can accidentally post a chat that is tied to their real account.

Rachel Tobac of Social Proof Security calls this “a UX failure that weaponizes convenience,” while the Electronic Privacy Information Center labels it “incredibly concerning” given the sensitive nature of some content.

Real-World Exposure: What’s Getting Leaked

A quick scan of the Discover feed reveals conversations their authors almost certainly never meant to publish:

- Medical Queries: Users describe post-surgery symptoms, chronic hives, and mental health struggles.

- Legal Advice: Teachers share their entire email threads about job-termination arbitrations, while landlords draft eviction notices.

- Personal Confessions: Affair admissions, faith crises, and financial anxieties appear alongside users’ visible Instagram or Facebook profiles.

- Illicit Planning: TechCrunch documented prompts on tax evasion strategies and fraudulent schemes—disclosures that could lead to real-world harm.

These examples underscore the severity: deeply private matters, once confined to a single device, are now archived and searchable by anyone, with no clear removal process.

Profile Linkage & Cross-Platform Risks

When users engage with Meta AI while logged into Facebook, Instagram, or WhatsApp, those chats can be mapped back to their real identity:

- Logged-In Defaults: Meta AI inherits your logged-in state, so even a quick ChatGPT-style prompt is tied to your social profile.

- Discover Feed Access: Both logged-in and logged-out visitors can browse shared chats, meaning personal data leaks even outside one’s network.

- Reputation & Safety: Exposed personal information invites doxxing, social engineering, and targeted harassment—risks magnified when combined with existing social-account data.

Privacy professionals stress that linking AI chats to social identities without explicit consent violates a basic expectation: that private information-seeking stays separate from public sharing.

Meta’s Response & User Remedies

Meta insists chats remain private unless users opt to share. In statements, a spokesperson highlighted a multi-step share process and pointed users to its Privacy Center. Yet no in-context warnings or default nudges explain the public nature of the Discover feed.

To protect your data:

- Disable Sharing in the App: In Meta AI’s settings, set your prompts to “Only Me.”

- Log Out Before You Chat: If you must use Meta AI, do so in a logged-out browser or incognito window.

- Opt Out of AI Training: Via Settings → Privacy Center → AI at Meta, submit an objection to keep your WhatsApp and other conversations from being used for model training.

- Delete Past Prompts: Use commands like `/reset-ai` in Messenger or Instagram to purge your AI chat history.

While these steps offer temporary relief, they place the onus entirely on users to navigate a confusing interface.

Regulatory and Ethical Implications

Current data-protection laws—GDPR in Europe, CCPA in California—mandate clear user consent and transparent data usage. Meta AI’s Discover feed clashes with these principles by:

- Lacking Explicit Consent: Users are never clearly told their chats will be public.

- Obscuring Data Usage: Meta’s own chatbot acknowledges that conversations may be used to train its models, yet Meta does not clarify how shared chats are treated differently from private ones.

- Insufficient Controls: The absence of real-time warnings or default privacy nudges runs counter to the transparency goals of emerging AI governance frameworks, such as the EU AI Act.

Regulators must clarify that any AI platform offering public sharing needs explicit, contextual consent for every sensitive data point—no buried settings or post hoc opt-outs.
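To make “contextual consent for every sensitive data point” concrete, here is a minimal Python sketch of a pre-publish check. It is an illustration under loud assumptions, not anything Meta or any regulator specifies: the category names, the keyword patterns in the hypothetical `SENSITIVE_PATTERNS` table, and the helpers `sensitive_categories` and `may_publish` are all invented here, and keyword matching is only a crude stand-in for real sensitive-content classification.

```python
import re

# Hypothetical, deliberately crude category patterns (an assumption for illustration).
# A real system would need far more than keyword matching to detect sensitive content.
SENSITIVE_PATTERNS = {
    "medical": re.compile(r"\b(symptoms?|diagnos\w*|surgery|medications?|therapy)\b", re.IGNORECASE),
    "legal": re.compile(r"\b(arbitrations?|lawsuits?|evictions?|attorneys?|lawyers?)\b", re.IGNORECASE),
    "financial": re.compile(r"\b(tax(es)?|debts?|loans?)\b", re.IGNORECASE),
}


def sensitive_categories(text: str) -> set[str]:
    """Return the names of every sensitive category whose pattern appears in the text."""
    return {name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)}


def may_publish(text: str, consented: set[str]) -> bool:
    """Allow publishing only if the user explicitly consented to every detected category."""
    return sensitive_categories(text) <= consented
```

For example, a chat mentioning “post-surgery symptoms” and an “arbitration” hearing would be held back until the user confirms both the medical and the legal category, while an innocuous recipe question passes with no extra prompt.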

Designing AI with Privacy in Mind

Meta AI’s public chat exposure is a stark lesson in UX-driven privacy failures. Trust in AI hinges on clear boundaries between private inquiry and public broadcast. For Meta, the path forward requires redesigning the share workflow with unambiguous warnings, defaulting to privacy-by-design, and offering one-click retractions.
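The three fixes named above (an unambiguous warning, a private default, and one-click retraction) can be sketched as a small share workflow. This is a hypothetical Python model, not Meta’s code: `Chat`, `Visibility`, `ShareFlow`, and the warning text are illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Visibility(Enum):
    PRIVATE = "private"
    PUBLIC = "public"


@dataclass
class Chat:
    chat_id: str
    text: str
    # Privacy by design: every chat starts out private.
    visibility: Visibility = Visibility.PRIVATE


# Hypothetical warning text shown in context, before any publish control.
PUBLIC_WARNING = (
    "This conversation will be posted to a public feed that anyone, "
    "logged in or not, can read."
)


class ShareFlow:
    """Hypothetical share workflow: in-context warning, explicit acknowledgment, one-click retraction."""

    def __init__(self) -> None:
        self.public_feed: dict[str, Chat] = {}
        self._warned: set[str] = set()  # IDs of chats whose warning was actually shown

    def request_share(self, chat: Chat) -> str:
        # Step 1: show the warning for this chat and record that it was shown.
        self._warned.add(chat.chat_id)
        return PUBLIC_WARNING

    def publish(self, chat: Chat, acknowledged: bool) -> bool:
        # Step 2: publish only if this chat's warning was shown AND explicitly acknowledged.
        if chat.chat_id not in self._warned or not acknowledged:
            return False
        chat.visibility = Visibility.PUBLIC
        self.public_feed[chat.chat_id] = chat
        return True

    def retract(self, chat: Chat) -> None:
        # One-click retraction: remove the chat from the feed and restore privacy.
        self.public_feed.pop(chat.chat_id, None)
        chat.visibility = Visibility.PRIVATE
        self._warned.discard(chat.chat_id)
```

The key design choice is that `publish` refuses to act unless the warning for that specific chat was shown and then acknowledged, so a stray tap cannot skip the disclosure step, and `retract` undoes publication in a single call.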

Only then can users safely explore AI’s promise without fearing that their most personal confessions become tomorrow’s headline.