- Document Integration: AI Mode now accepts desktop image uploads and will soon support PDFs, enabling AI-driven queries across user-provided files.

- Canvas Workflow: The new Canvas tool creates a dynamic side panel that saves, updates, and organizes multi-session plans for projects and trips.

- Live Video Search: Search Live adds real-time video input via Google Lens, allowing users to converse with AI about what their camera sees.

- Chrome AI Panel: A contextual “Ask Google about this page” feature in Chrome lets users highlight content for on-the-spot AI Overviews and follow-ups.

- Multi-Modal Future: These features signal Google’s shift toward search as an interactive, persistent, and multi-modal workspace rather than isolated queries.

Discover how AI Mode transforms search into an interactive workspace with file uploads, real-time video assistance, and Canvas.

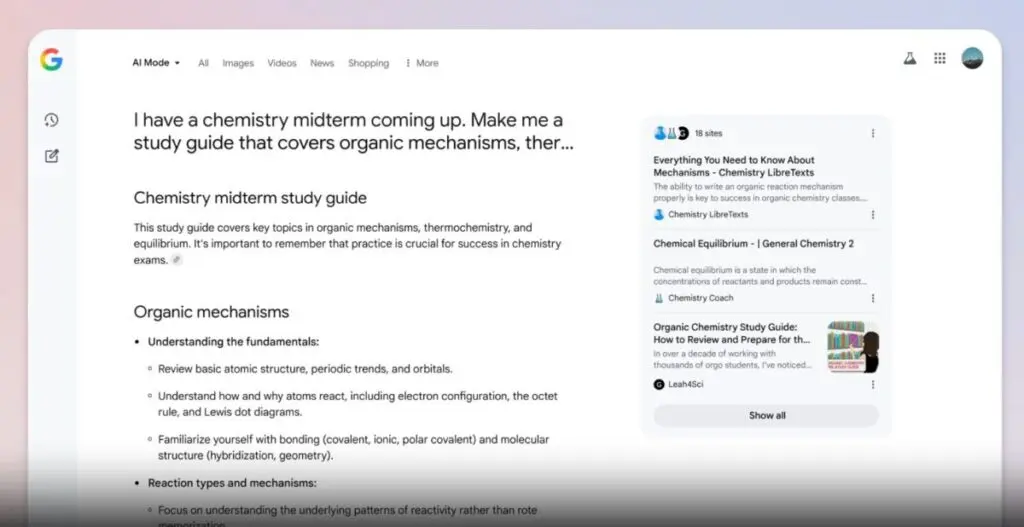

When Google first introduced AI Mode in Search earlier this year, it promised a more conversational, context-aware way to find information online. Rather than presenting users with a static list of blue links, AI Mode treated each query as part of an ongoing dialogue—summarizing web results, offering clarifications, and enabling follow-up questions.

The latest update takes that promise further still. By adding support for PDF and image uploads, integrating a persistent “Canvas” workspace, and weaving live video into the mix, Google is transforming Search into an interactive, multi-modal environment.

No longer is search just about typing a question and moving on; it’s about building, exploring, and creating over multiple sessions and across different media types.

Bringing Your Documents into the Conversation

One of the most transformative additions to AI Mode is the ability to upload files directly into the search experience. Until now, users could point their camera at an object or photograph and ask Google Lens to identify it.

But what if the information you need lives inside a PDF—lecture slides, technical specifications, or a research report? Soon, AI Mode on desktop will let you drag and drop those documents into the search window. From there, you can ask the AI to explain passages, compare sections, or cross-reference document content with broader web insights.

Imagine reading a dense policy paper: instead of hunting for context across multiple tabs, you simply upload the PDF and ask AI Mode to distill each section into plain English. The AI can pull in relevant news articles, tutorials, or academic commentary, weaving a narrative that bridges your file and the wider internet.

This feature is slated to support additional file formats in the coming months, with deeper integration into Drive and other parts of Google Workspace on the horizon.

Meanwhile, desktop users already have access to image upload support. Whether you need to understand a chart from a scanned document or decipher text from a photograph, you can now drop an image into Search and engage the AI directly.

This helps anyone who works with visual information: students grappling with handwritten notes, professionals reviewing scanned contracts, or hobbyists learning from screenshots.

Canvas: A Living, Breathing Planning Board

Search has always been episodic: you type, click, and then move on to a new tab. The new Canvas feature rewrites this script. When you’re planning a project—be it a trip, a study schedule, or even a complex proposal—AI Mode prompts you to “Create Canvas.”

A side panel appears, collecting key suggestions, links, and AI-generated outlines. As you refine your queries, the Canvas updates in real time, saving your progress across sessions.

Canvas becomes your personal research assistant. You might begin by asking for the best hiking routes in a national park, then explore recommended gear, lodging options, and safety guidelines—all without losing sight of your central plan.

Weeks later, you can revisit the Canvas to adjust dates, swap out activities, or add new requirements. The tool retains context, merging fresh web data with your existing outline.

For educators and students, this means study guides that evolve automatically. Start with broad historical questions, drill into primary sources, and let the AI surface thematic connections. Canvas will assemble timelines, vocabulary lists, and suggested readings—then stay active as you prepare for exams.

Canvas could also streamline collaborative work: Canvases can be shared and built upon by multiple contributors, creating a living document that combines individual research with collective insight.

Search Live: Real-Time Video Meets Conversational AI

Perhaps the most futuristic aspect of Google’s update is the introduction of Search Live with video input. Powered by the same technology behind Project Astra, this capability turns your phone into an interactive research tool. Within the Google app, a new Live tab joins Search and Translate. Tap Live, point your camera at a plant species, a foreign menu, or even a complex machine, and you can speak naturally to the AI.

“Hey Google, what ingredients are in this dish?” you might ask, pointing at a bowl of ramen. Or, “How do I fix this pipe joint?” while pointing the camera at the fitting. The AI analyzes each frame of video, grounding its responses in what the camera sees. Follow-up questions feel seamless: you don’t have to type a new query or describe the scene, because the AI already has your visual context.

For shoppers, this could mean instantly comparing product details on a shelf. For travelers, it could mean translating street signs and getting explanations of local customs on the spot. For professionals in the field (mechanics, architects, medical trainees), the ability to ask an AI assistant to interpret a diagram or piece of equipment in real time could change on-the-job learning.

While initially limited to U.S. Labs participants, Search Live hints at a future where search happens in the world around you, not just on the screen in front of you.

AI at Your Fingertips in Chrome

Google Lens has quietly crept onto desktop in recent months, allowing users to right-click on images and ask what they are. Now, Lens and AI Mode integrate more tightly into Chrome itself. An “Ask Google about this page” option appears in the address bar dropdown. Select it, and a panel opens, inviting you to highlight any segment—text, image, or diagram—and receive an “AI Overview.”

This isn’t a one-and-done pop-up. Within that panel, you can dig deeper. A “Dive deeper” button takes you back to AI Mode, where follow-up questions get more detailed insight.

Perhaps you’ve landed on a news article about a newly passed law. Highlight the paragraph that interests you and ask for historical context, opposing viewpoints, or expert commentary. The AI stitches together the relevant data, creating a richer understanding without navigating away.

This integration foreshadows a world where every web page becomes an entry point to AI-driven exploration. Instead of bookmarking pages to revisit later, you save them to a Canvas or summon AI directly for clarification. Over time, this could alter how content is produced—writers may optimize not only for SEO, but for AI-friendly snippets that aid comprehension when queried.

A Multi-Modal Vision for the Future

Taken together, these updates show Google’s commitment to evolving Search beyond isolated queries. AI Mode transforms search into a workspace: one where text, voice, images, documents, and live video all converge under a unified conversational interface. Canvas ensures that user journeys are persistent and path-dependent, favoring long-term projects over single-topic lookups. Live video turns the physical world into searchable terrain.

For everyday users, these changes mean fewer context switches. Students, professionals, and casual explorers alike can approach search as a continuous dialogue that spans media types and time.

For content creators and SEO strategists, it signals a shift in optimization strategies—toward crafting content that supports AI understanding across file formats, anticipating how AI might summarize or contextualize their work.

While most features remain in early Labs testing in the U.S., the trajectory is clear: search is becoming an immersive, interactive assistant rather than a passive index. As these tools roll out globally, they could redefine our expectations of information access and challenge us to rethink how we engage with the web.

Google’s latest AI Mode update doesn’t just tweak the edges of search; it redraws the boundaries of what search can be. With file uploads, dynamic planning canvases, and live video assistance, Google is moving toward a model of search in which knowledge is not merely found, but actively built, shared, and experienced in real time.