For brands that have built a presence on social media, growing that presence may be a top priority. However, equally important is maintaining a consistently good image.

Social media is great for building an online presence, but inherently risky. Plus, platforms like X have only a few thousand content moderators to deal with millions of users, making it crucial that businesses establish their own content moderation practices. Here’s an in-depth look at what content moderation is and how to carry it out on social media.

What Is Content Moderation?

Content moderation is the process of monitoring whether content submitted to a website complies with the site’s rules and guidelines and is suitable to appear on the site.

Essentially, it helps to ensure that the content published on the site is not:

- Too sensitive

- Illegal

- Inappropriate

- Harmful

- Harassing to others

It’s commonly used by websites that rely heavily on user-generated content (UGC) such as:

- Forums

- Social media platforms

- Dating sites

- Online marketplaces

In the context of social media, content moderation is the process of reviewing and managing UGC on your brand’s social media page. Also called social media moderation, this could involve:

- Responding to comments made by users

- Reporting or removing posts and comments if they don’t comply with your brand’s guidelines

The Crucial Role of Content Moderation: Why It Matters Now More Than Ever

Content moderation is equally important for your brand and audience. Here’s why it should be a top priority when creating your brand’s social media strategy:

Safeguards your community

Once you’ve built a social media community of loyal fans and followers, you’ll want to do everything you can to protect it. The threat could be blatantly harmful content like hate speech and discriminatory language, or it could be spam and scams.

Scammers may be prowling the comment section for vulnerable people who may be in search of deals and discounts. Or they could comment about quick ways to make money or solve a certain problem like in the following example.

Scammers could then target these users by asking for personally identifiable information, which could be used maliciously.

Content moderation helps you detect misinformation and these types of harmful or hateful comments and manage them effectively.

Controls your brand image

Hate speech threatens not only your followers’ safety but also your brand. The comments on your brand’s social media page are a reflection of your brand image.

So, whether you agree with it or not, permitting hate speech or discriminatory language in your comments section reflects poorly on your brand. People are going to associate your brand with those comments.

In fact, data published by Statista Research Department reveals that 68% of adult consumers in the US highlighted that hate speech and acts of aggression endanger brand safety.

The reality is that in this day and age hate speech is still prevalent, especially on Facebook, TikTok, and X. During the third quarter of 2023, Facebook removed nearly 10 million pieces of hate speech.

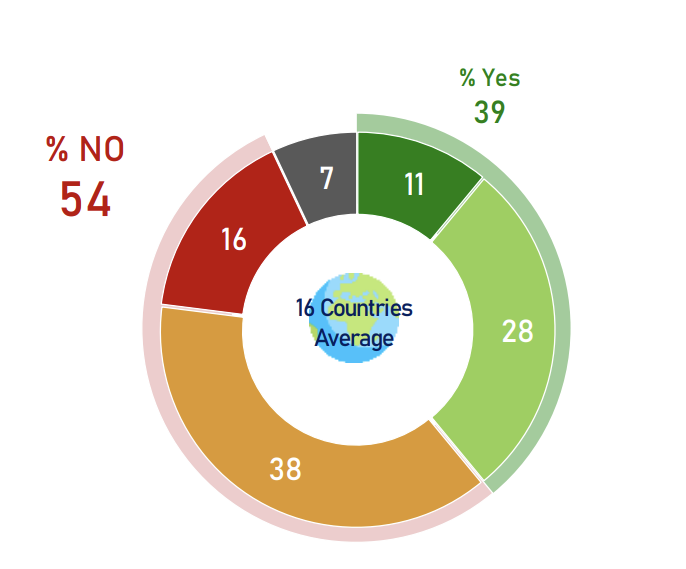

Yet, a global survey on the impact of online disinformation and hate speech conducted by UNESCO and IPSOS reveals that 54% of respondents feel online platforms aren’t doing enough to deal with hate speech. This further highlights the importance of content moderation for brands.

Question: Do you think online platforms are doing enough to combat hate speech? (Whole sample)

Identifies issues

Whether it’s a typo in your caption, a broken link in your ad or simply a product suggestion, social media users are quick to point out issues that need fixing. In this Lululemon example, the issue luckily was just a lack of pockets.

Moreover, spikes in negative comments could also reveal bigger issues related to your brand and/or its products. Moderating comments is a great way to identify issues and resolve them before things escalate.

Keeps unauthorized selling in check

Large brands often fall victim to counterfeiting and unauthorized reselling, and counterfeiters and unauthorized resellers could use your social media comments section to find potential buyers. Moderating your comments is crucial to keep those attempts in check and ensure that you don’t lose out on sales to them.

Ensures information accuracy

Your brand’s comment section can also invite trolls and malicious users who want to spread false information about your products or service. You may also get comments containing inaccurate information about your products or service, such as the ingredients/materials used or how they should be used.

Whether these users mean actual harm to your brand or simply want to troll, they could create a negative brand perception and diminish brand trust.

In some cases, they could even cause actual harm to community members who follow their advice.

Human Moderation vs Automation: How Do You Moderate Content?

When it comes to content moderation, you basically have two ways to do it:

- Using an employee to moderate content manually

- Automating the bulk of the work with a content moderation tool

With manual moderation, every comment goes through proper review, but it can be time-consuming. As such, human moderation may only be suitable for small businesses that only see a handful of comments on their posts.

When the comments and conversations start to build up, brands will need to stay on top of those activities to ensure that no harmful comments fall through the cracks. To make it more challenging, they need to do so in near real-time so that problematic content doesn’t have time to cause damage to their community or their brand reputation.

Automated moderation using content moderation tools makes this mammoth job so much easier, making it the ideal option. The tool automatically screens each comment that’s submitted to your social media page and either approves or rejects it. This significantly speeds up the process of reviewing and managing comments and posts on your social media pages.

In some cases, the comments are also sent to a human moderator for additional review. This extra step is important when the tool can’t understand the nuances in the comment to assess whether it complies with the brand’s community guidelines.
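To make the approve, reject, or escalate flow more concrete, here is a minimal sketch in Python. It assumes the tool produces a risk score between 0 and 1 for each comment; the function name `triage` and both thresholds are hypothetical and would need tuning against your own community guidelines. It is not how any particular moderation tool works internally.

```python
# Hypothetical thresholds; adjust them to match your brand's guidelines.
REJECT_THRESHOLD = 0.9   # at or above this, the comment is clearly problematic
APPROVE_THRESHOLD = 0.2  # at or below this, the comment is clearly fine


def triage(risk_score: float) -> str:
    """Map a risk score in [0, 1] to a moderation decision."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= REJECT_THRESHOLD:
        return "reject"
    if risk_score <= APPROVE_THRESHOLD:
        return "approve"
    # Anything in between is too ambiguous for the tool to decide alone.
    return "human_review"


for score in (0.95, 0.05, 0.5):
    print(score, triage(score))
```

The middle band is the important part: the wider it is, the more comments reach a human moderator, which trades speed for accuracy.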

If you’re already using a social media management tool, see if it comes with built-in content moderation features. Examples of social media management tools that include this functionality are:

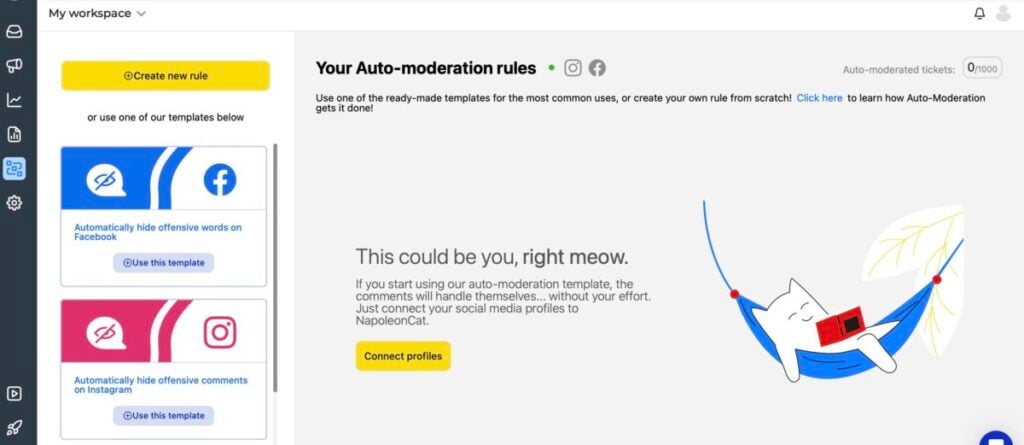

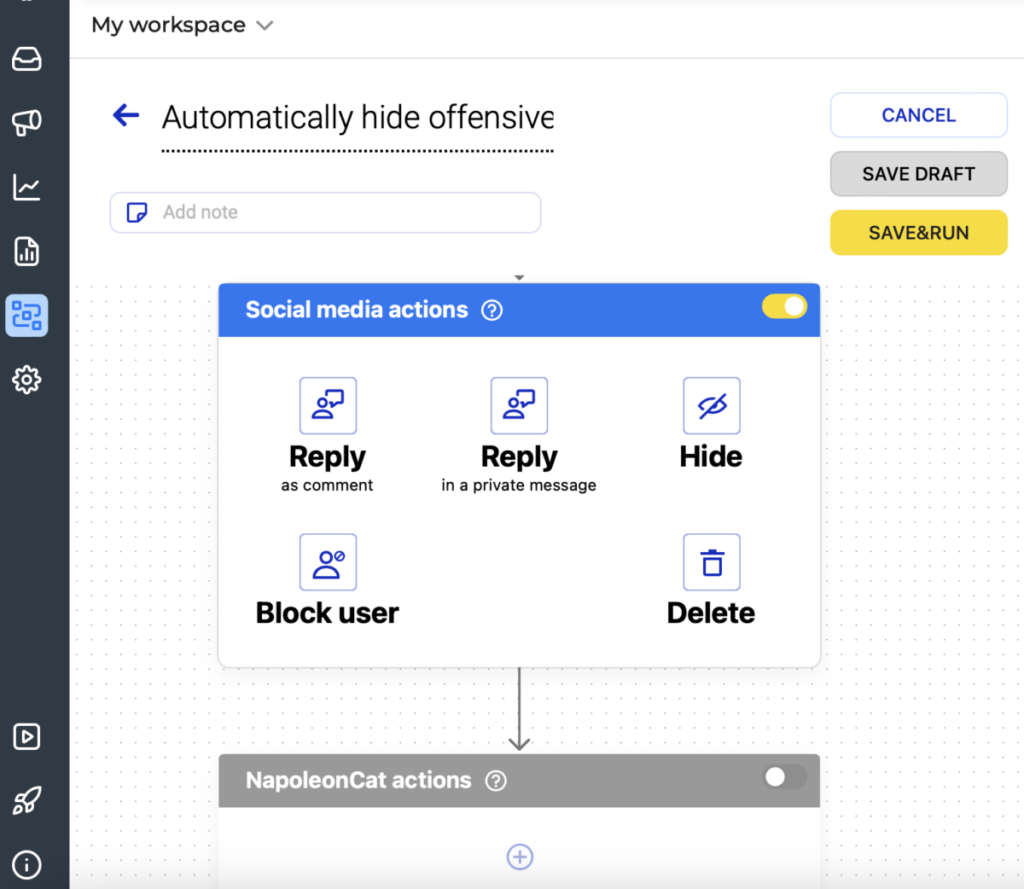

For example, with NapoleonCat you can manage comments, reviews, and messages across multiple social media channels. It offers templates that you can use to set up auto-moderation rules or create a rule from scratch.

Let’s say you want to use it to hide offensive words automatically. In this case, you choose keywords that will trigger a specific social media action.

That said, it doesn’t just have to be swear words. You can also choose to hide comments with phrases like “click here” that have a high probability of being spam.
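Conceptually, a keyword rule like this boils down to a case-insensitive phrase match. The Python sketch below illustrates the idea only; it is not NapoleonCat’s implementation, and the phrase list and the names `build_pattern` and `should_hide` are made up for the example.

```python
import re

# Illustrative trigger phrases; a real list would come from your own guidelines.
TRIGGER_PHRASES = ["click here", "free money"]


def build_pattern(phrases):
    """Compile one case-insensitive pattern that matches any whole phrase."""
    if not phrases:
        raise ValueError("at least one trigger phrase is required")
    escaped = (re.escape(phrase) for phrase in phrases)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


PATTERN = build_pattern(TRIGGER_PHRASES)


def should_hide(comment: str) -> bool:
    """Return True when the comment contains any trigger phrase."""
    return PATTERN.search(comment) is not None


print(should_hide("CLICK HERE for a free deal"))
print(should_hide("Love the new jacket!"))
```

Simple matching like this is easy to evade with creative spelling, which is one reason keyword rules work best alongside human review.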

Otherwise, you can check out dedicated content moderation tools that offer varying levels of moderation and different features. Examples include:

- Hive Moderation

- Besedo

- CommentGuard

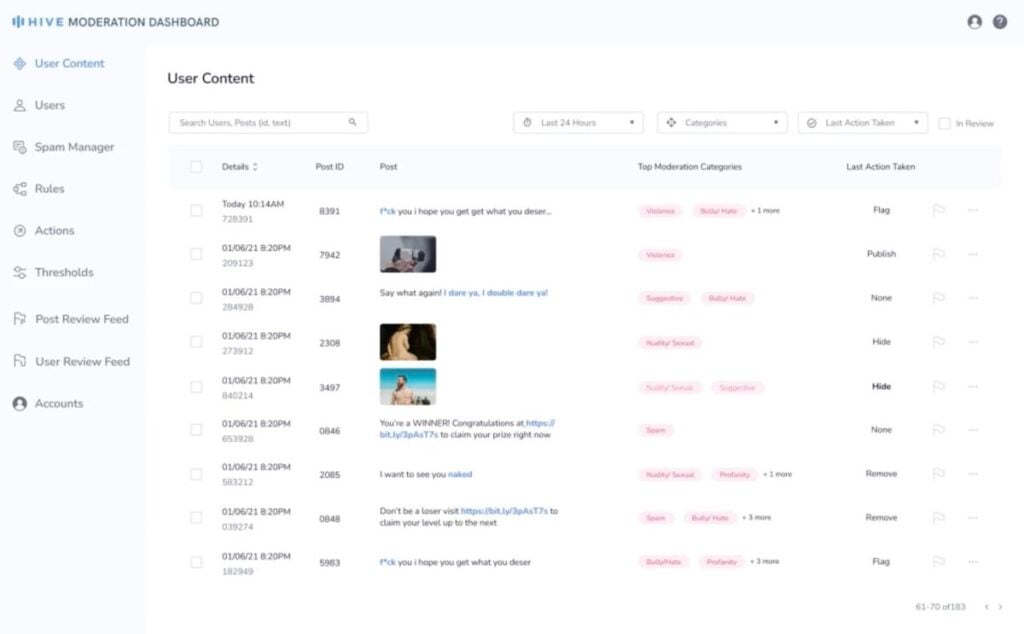

For example, Hive Moderation offers automated moderation but also lets you flag certain content for human review. You can set up custom actions in its interface and it will automatically apply these rules to images, text, or even video/audio files.

The ability to moderate visuals means that you can check profile pics which often go under the radar. That’s how Plato, a social gaming community, uses Hive Moderation.

In addition to profile pics, Plato also uses it to moderate group chats (over 10,000 photos and messages get checked daily). It has helped them to reduce user complaints about inappropriate content by as much as 90%.

What Are the Best Practices for Content Moderation?

A tool can only get you so far. The following best practices can also help brands to create a positive online environment:

Write clear community rules and guidelines

Start by defining what the rules and guidelines are so your audience knows exactly what kind of environment you want to create for your community. Use the following as guidance:

- Who your followers are

- Your brand personality

- The type of content you have on your page

For example, some brands may allow swear words (especially when used in a positive context). Other brands may not be as lenient.

In fact, an article exploring profanity’s impact on word of mouth published in the Journal of Marketing Research found that product reviews that contain a swear word or two are often viewed as more helpful.

That said, in other instances it’s more clear-cut. We can all agree that certain offensive and discriminatory language like racist slurs should have no place, no matter who the brand or target audience is.

To ensure transparency and prevent confusion, you may want to publish these rules and guidelines for your audience to see. On your website, you’ll typically share this in the footer area.

Source: hubspot.com

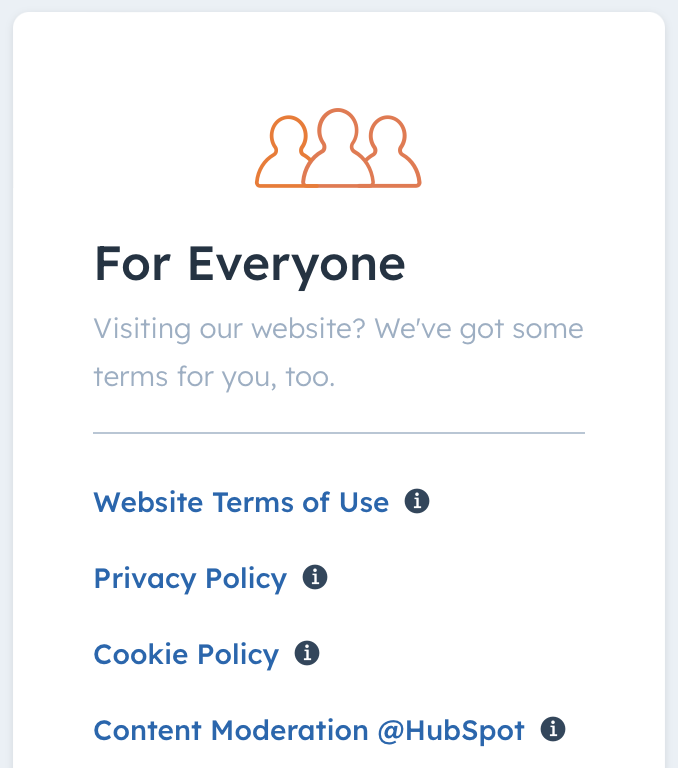

For example, HubSpot shares their rules on their website under Legal Stuff (found in the footer). When you click on the link, you’re redirected to their different legal documents which include a content moderation policy.

Interestingly, HubSpot’s policy also prohibits individuals or organizations that promote hate speech, discrimination, or violence through the distribution of UGC or their own content from using HubSpot.

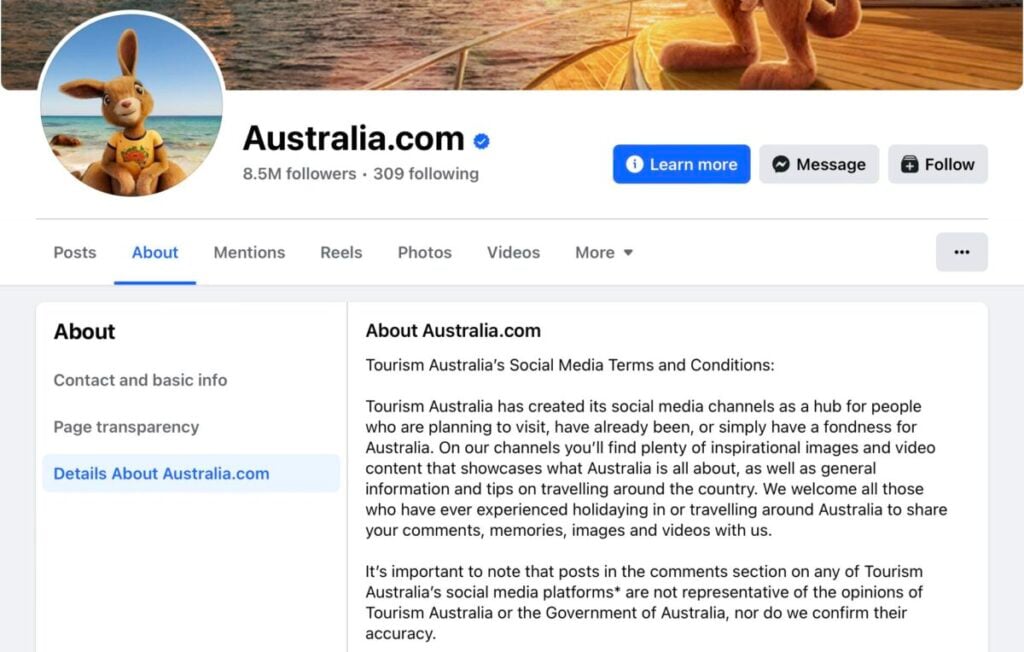

On Facebook, you can add this info in the “About” section of your page. This is what Tourism Australia has done.

They explain that it serves as a hub where people are welcome to share comments, videos, and images. While expression of opinions and open discussion are encouraged, they outline which types of posts won’t be tolerated. In addition to the standard rules, they also prohibit:

- Solicitations and advertisements

- Multiple, successive off-topic posts by the same user

- Content that infringes third-party intellectual property rights

When it comes to content moderation, you need to think further than just the obvious bad words. As mentioned earlier, spam and scams can also hurt brand trust and ruin the community experience. This is what makes Tourism Australia’s rules such a useful example of how detailed you should be.

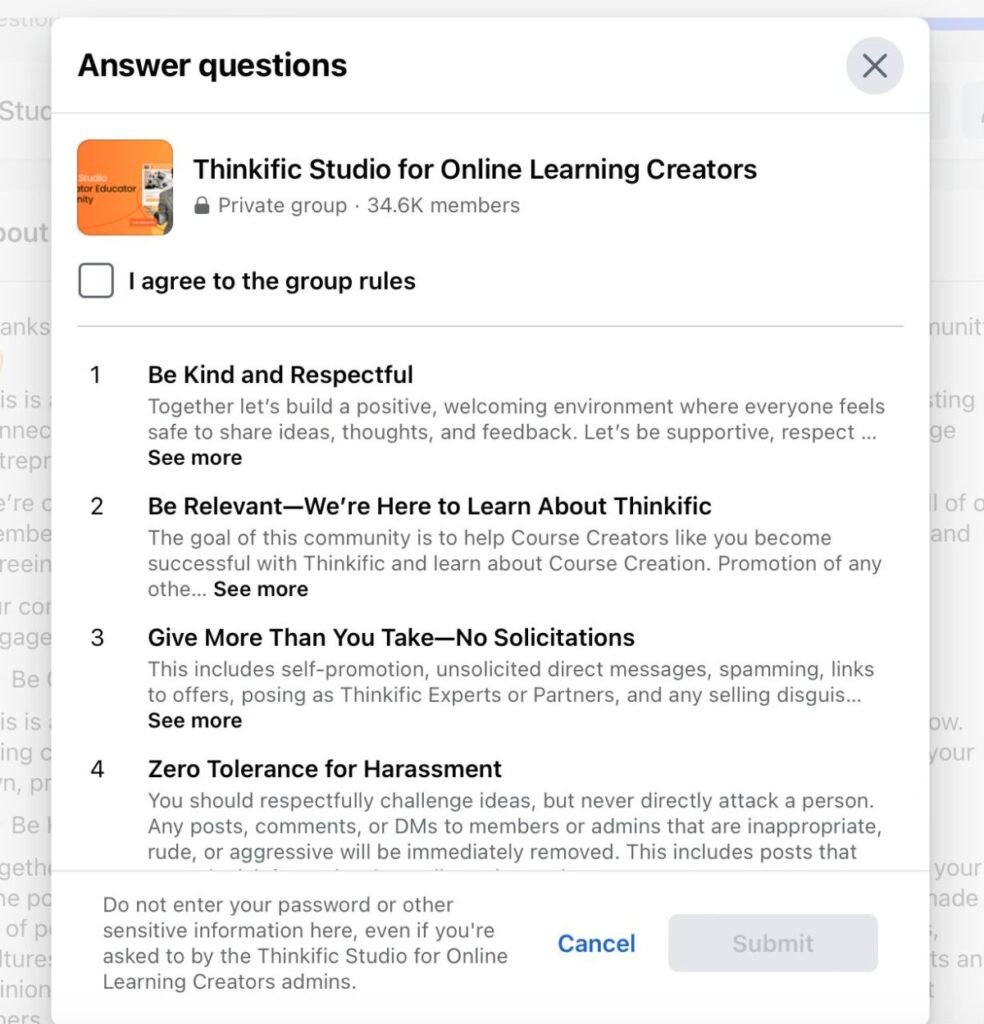

If you’re creating a private Facebook group, you can share your guidelines in the form of group rules. For example, the admins of Thinkific Studio for Online Learning Creators share five key rules that group members must agree to before they can join.

Establish protocols for appropriate action

Once your rules and guidelines are established, you also need well-defined protocols on what actions to take when you come across content that violates those rules. This will bring clarity to the rest of the team on what warrants removal and what needs to be reported.

The protocol should define:

- What types of comments should be removed or rejected

- What types of comments should be kept, but responded to

- How to construct the responses

- When certain comments should be passed to a human moderator

In the Tourism Australia example above, the page also specifies what action will be taken when users violate the guidelines. These include:

- Deleting posts

- Blocking the user from following the brand’s social media profiles

- Referring the user to the relevant authorities (reserved for extreme circumstances)
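If your team prefers something more concrete than a written policy, the protocol can also be captured as a simple lookup table. The Python sketch below is only an illustration: the comment categories, the `Action` names, and the fallback to a human moderator are all assumptions, not rules taken from Tourism Australia or any other brand.

```python
from enum import Enum


class Action(Enum):
    REMOVE = "remove the comment"
    RESPOND = "keep the comment and respond"
    ESCALATE = "pass to a human moderator"


# Hypothetical mapping from comment category to the agreed action.
PROTOCOL = {
    "hate_speech": Action.REMOVE,
    "spam": Action.REMOVE,
    "complaint": Action.RESPOND,
    "misinformation": Action.RESPOND,
}


def action_for(category: str) -> Action:
    """Look up the agreed action; unknown categories go to a person."""
    return PROTOCOL.get(category, Action.ESCALATE)


print(action_for("spam").value)
print(action_for("sarcasm").value)
```

Defaulting to escalation means that anything the protocol doesn’t anticipate gets a human decision rather than an automatic one.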

Respond to negative comments

It may be instinctual to ban or remove every negative comment your brand receives, but this could sometimes do more harm than good. While it may seem counterintuitive to let negative comments remain on your page, those comments could prove to be an asset for your brand with the right response protocol in place.

Would your audience trust your brand if they notice that you’re removing every single negative comment?

If they can see that you’re trying to resolve customer complaints or clarify any confusion, it could do more for brand trust. It shows transparency and authenticity.

Here’s an example of how Domino’s responds to bad reviews. While they probably had every reason to unmention themselves in this message because it used a swear word, they didn’t.

Sorry for the trouble you experienced with this, Collin. Please DM us so we can help look into this further. *Ramea https://t.co/Cf8BiToVpb

— Domino's Pizza (@dominos) July 11, 2024

The protocol described in the previous section should also outline how to deal with different types of negative comments.

For example, negative comments that are trying to spread false information about your products should receive a response setting the record straight. For a comment that points out a genuine mistake, you can respond by taking responsibility and explaining how you’ll try to fix it.

Encourage staff participation

Content moderation tends to be reactive. You wait for a negative comment and respond to it.

However, there’s some room for being proactive. Depending on the nature of your business, you could also have your staff set the tone for the type of conversations you want on your social media pages.

Seeing your staff start the conversation with entertaining and relevant comments could give people some direction on what types of comments they should leave. This is an effective way to minimize the need for moderation because most people will try to maintain the standard of conversation that’s been set.

This is what Buffer does well.

For example, in a LinkedIn post, Buffer’s Director of Growth and Product Marketing, Simon Heaton, shares what Buffer’s own tech stack looks like. It sets the tone that Buffer is about offering value, whether it’s through their own software or the content that they share. They don’t take to social media to point out the inadequacies of other software, which encourages their followers to follow their example and post positive, constructive content.

Additionally, your staff could also provide informed answers to people who have doubts or questions in the comments. This helps keep things personal while helping you save tons of time in keeping on top of all those conversations.

Assign a content moderator

Next, it’s time to assign a content moderator and/or community manager to help you manage your online presence. Although you may rely heavily on a tool to automate your social media moderation, tools can’t fully replace humans.

A content moderator may be able to understand the nuances in a comment that an automated tool might miss. For example, a sarcastic comment about your service may be mistakenly approved by the moderation tool because of the positive words it contains.

As such, a content moderator ensures that the comments and posts on your social media page align with your brand’s image. They help to maintain a certain level of quality in the standard of conversations on your page so that brand perception remains positive.

Plus, when customers need more detailed responses, your default doesn’t need to be to refer them to your Help page. While you’re free to ignore those comments or provide copy-pasted responses, that won’t do much good if you want to build a strong and engaged community.

When going this route, it’s important to be clear about who’s responsible for what. For example, who responds to comments and who should look into the issues raised in the comments sections?

Look for opportunities to educate and engage your audience

Your comment section could be a goldmine for community engagement with people asking questions and looking for help. Make sure you’re actively trying to surface those comments and turn them into something valuable for your brand.

For example, someone could ask a question about a featured product, the ingredients you use or the sustainability goals you have. Answering those questions helps to not only educate the original commenter but also others who chance upon your conversation.

See the following conversation in which customers ask Everlane if the products featured in the Instagram posts are part of their range. By taking the time to read these comments, Everlane could provide the name of the product and point these customers in the right direction, possibly closer to checkout.

That said, it doesn’t always need to be about pushing a sale. Meow Meow Tweet cleverly uses comments to educate customers about how to care for their products, helping them to enjoy the ultimate experience.

Key Takeaways

Content moderation is critical for maintaining brand trust, upholding community standards, and ensuring safety. Implementing best practices in content moderation not only protects your online communities from inappropriate or harmful content, but also upholds the integrity of your brand.

While manual moderation has its place, automation is increasingly becoming the go-to solution for efficient and effective content management. Automated moderation can quickly filter out inappropriate content, helping to lessen the emotional toll on human moderators.

That said, you’ll still need some human help to “read” the nuances.

Plus, it doesn’t just have to be about deleting social media posts and comments. Content moderation can also identify opportunities for brand growth. Engage with the user posts and see what you can learn about their needs.

Frequently Asked Questions

What does a content moderator do?

Content moderators work behind the scenes to help create a safe, positive online environment. Their job involves monitoring, reviewing and managing user-generated content (UGC) like images, videos, and comments. Once they identify inappropriate content, they need to take the prescribed action which can involve removing it, issuing a warning, or banning the user.

What are the challenges of content moderation?

Because of the sheer volume of content that gets uploaded, the challenge is to balance speed and accuracy. You’ll also need to balance freedom of speech and human safety. Sometimes the context is ambiguous and you need to keep cultural nuances in mind. If human moderation is used, there’s also the emotional toll of continuous exposure to disturbing and graphic content.

Why is content moderation important for UGC campaigns?

Content moderation helps to maintain the integrity of your campaign and protect your brand’s reputation. UGC is great because it’s so authentic, but it can also include inappropriate or offensive content that you need to filter out. There are also legal and ethical standards that you need to keep in mind, and content moderation can help you avoid penalties and even lawsuits.

Which tools are used for content moderation?

The following are examples of content moderation tools and software solutions:

- Hive Moderation

- Respondology’s The Mod™

- Besedo

- NapoleonCat

- Juicer